What is Truth GPT or DALL-E 2? | Elon Musk

Author: neptune | 20th-Apr-2023

Elon Musk, who co-founded OpenAI but left its board in 2018, has announced plans for a new AI model he calls “TruthGPT”, which he has described as a “maximum truth-seeking” AI. Based on early descriptions, the model would aim to identify factual inaccuracies and logical inconsistencies in a given piece of text, which could make it a valuable tool in the fight against fake news and misinformation online. DALL-E 2, by contrast, is a separate OpenAI model that generates images from text descriptions; it is not another name for Truth GPT. In this article, we will explore what Truth GPT is, how it differs from other AI models like ChatGPT, and the potential applications and limitations of this technology.

What is Truth GPT?

Truth GPT is a proposed language model, announced by Elon Musk rather than developed by OpenAI, that aims to better distinguish between true and false statements. Unlike earlier AI models such as GPT-3, which generate text based on statistical patterns and probabilities, Truth GPT is intended to identify factual inaccuracies and logical inconsistencies in a given piece of text.

How is it different from ChatGPT and other AI models?

ChatGPT and other language models like it are designed to generate natural language responses to a given prompt or query. They do this by analysing large amounts of text data and using statistical algorithms to predict the most likely sequence of words to follow a given input. These models are highly effective at generating coherent and natural-sounding text, but they are not explicitly designed to determine the truth or falsehood of a given statement.
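To make “predicting the most likely sequence of words” concrete, here is a deliberately tiny sketch. It is not how ChatGPT is implemented: real models use large neural networks over tokens and look at far more context. This toy simply counts which word follows which in a small, made-up corpus (a bigram model) and then greedily picks the most frequent follower. The function names are illustrative only.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is immediately followed by each other word."""
    words = text.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> str | None:
    """Return the word most often seen after `word`, or None if `word` is unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily extend `start` by repeatedly appending the most likely next word."""
    output = [start.lower()]
    for _ in range(length):
        nxt = predict_next(counts, output[-1])
        if nxt is None:
            break  # no statistics for this word, so stop generating
        output.append(nxt)
    return output


corpus = "the cat sat on the mat . the cat sat down ."
model = train_bigrams(corpus)
print(generate(model, "the", 2))  # ['the', 'cat', 'sat']
```

Notice that nothing in this procedure checks whether the generated text is true; it only reflects which word sequences were frequent in the training data. That is the gap a truth-focused model would try to close.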

Truth GPT, on the other hand, is intended specifically to evaluate the veracity of a given statement or piece of text. As described, it would do this by analysing the statement's content, context, and logical coherence, and comparing it to a large database of factual information. The model is also expected to identify logical fallacies, circular reasoning, and other forms of faulty logic.
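Technical details of Truth GPT have not been published, so the sketch below is only a toy illustration of the “compare against factual information” idea, not a description of how Truth GPT works. It assumes claims have already been reduced to (subject, relation, value) triples, and it uses a tiny hypothetical `FACTS` table and a hypothetical `check_claim` function invented for this example. A real fact-checker would also need to extract claims from free text, retrieve evidence, and handle uncertainty.

```python
from __future__ import annotations

from enum import Enum


class Verdict(Enum):
    SUPPORTED = "supported"
    CONTRADICTED = "contradicted"
    UNVERIFIABLE = "unverifiable"


# Hypothetical reference data: (subject, relation) -> expected value.
# A real system would need a far larger, curated, and regularly updated source.
FACTS: dict[tuple[str, str], str] = {
    ("earth", "orbits"): "sun",
    ("paris", "capital_of"): "france",
    ("dall-e 2", "developed_by"): "openai",
}


def check_claim(subject: str, relation: str, value: str,
                facts: dict[tuple[str, str], str] = FACTS) -> Verdict:
    """Compare a structured claim against the hypothetical reference data."""
    key = (subject.lower(), relation.lower())
    if key not in facts:
        # Nothing to compare against, e.g. a subjective or unknown claim.
        return Verdict.UNVERIFIABLE
    if facts[key] == value.lower():
        return Verdict.SUPPORTED
    return Verdict.CONTRADICTED


print(check_claim("Earth", "orbits", "Sun").value)         # supported
print(check_claim("Paris", "capital_of", "Germany").value)  # contradicted
print(check_claim("Pizza", "tastes", "great").value)        # unverifiable
```

The same toy also shows why data quality matters: calling `check_claim("Earth", "orbits", "Sun", facts={("earth", "orbits"): "moon"})` returns `Verdict.CONTRADICTED` for a true statement, because the checker can only be as accurate as its reference data.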

Why is Truth GPT important?

With the rise of fake news, propaganda, and misinformation online, it has become increasingly difficult for individuals and organisations to distinguish between fact and fiction. This has led to a growing need for tools and technologies that can help us evaluate the veracity of the information we encounter.

If it works as described, Truth GPT could represent a significant step forward in this regard. A tool that can reliably assess the truth or falsehood of a given statement would help individuals and organisations make more informed decisions and avoid being misled by false or misleading information.

What are the potential applications of Truth GPT?

There are many potential applications for Truth GPT across a wide range of industries and domains. Here are just a few examples:

1. Journalism and Media: Truth GPT could be used to fact-check news articles and other media content, helping to ensure that accurate and reliable information is disseminated to the public.

2. Business and Finance: Truth GPT could be used to evaluate the accuracy of financial reports and other business-related information, helping investors and other stakeholders make more informed decisions.

3. Education: Truth GPT could be used as a teaching tool to help students develop critical thinking skills and evaluate the validity of information they encounter.

4. Politics and Government: Truth GPT could be used to evaluate the veracity of political speeches and campaign promises, helping voters make more informed decisions at the ballot box.

What are the limitations of Truth GPT?

While Truth GPT could represent a significant advancement in the field of natural language processing, it would not be without its limitations. For example, the model's accuracy would depend on the quality and accuracy of the data it is trained on. If that data contains inaccuracies or biases, the model's output may be similarly flawed.

Additionally, the model's ability to evaluate the truth or falsehood of a statement is limited to factual claims that can be verified by objective sources. Claims that are subjective or value-laden may be more difficult for the model to evaluate accurately.

Conclusion

In summary, Truth GPT is a language model proposed by Elon Musk that aims to better distinguish between true and false statements. Unlike other AI models, it is intended specifically to evaluate the veracity of a given statement or piece of text, which could make it a valuable tool for a wide range of industries and domains. While such a model would have its limitations, it could represent a significant step forward in the field of natural language processing and help to address the growing problem of fake news and misinformation online.

#JavaScript #AI #Python #Hackerrank #Motivation #React.js #Interview #Testing #SQL #Selenium #IT #LeetCode #Machine learning #Problem Solving #AWS #API #Java #GPT #TCS #Algorithms #Certifications #Github #Projects #Jobs #Django #Microservice #Node.js #Google #Story #Pip #Data Science #Postman #Health #Twitter #Elon Musk #ML

PaLM 2: Google's Multilingual, Reasoning, and Coding Model

PaLM 2: Google's Multilingual, Reasoning, and Coding ModelAuthor: neptune | 13th-May-2023

#Machine learning #AI #Google

Google introduces PaLM 2, a highly versatile language model with improved multilingual, reasoning, and coding capabilities powering over 25 Google products and features...

Comparing Chat GPT and Google Bard: Differences and Applications

Comparing Chat GPT and Google Bard: Differences and ApplicationsAuthor: neptune | 17th-Jun-2023

#Machine learning #AI #Google #GPT

Chat GPT and Google Bard are two of the most popular language models that have been developed in recent years. Both of these models are designed to generate human-like responses to text-based inputs...

7 Open Source Models From OpenAI

7 Open Source Models From OpenAIAuthor: neptune | 11th-May-2023

#Machine learning #AI

Elon Musk criticized OpenAI for becoming a closed source, profit-driven company. Despite this, OpenAI has released seven open source models, including CLIP and Dall-E...

The Godfather of AI Sounds the Alarm: Why Geoffrey Hinton Quit Google?

The Godfather of AI Sounds the Alarm: Why Geoffrey Hinton Quit Google?Author: neptune | 09th-May-2023

#Machine learning #AI

Geoffrey Hinton, the Godfather of AI, has quit Google and warned of the danger of AI, particularly the next generation AI language model, GPT-4...

Generative AI Made Easy: Explore Top 7 AWS Courses

Generative AI Made Easy: Explore Top 7 AWS CoursesAuthor: neptune | 05th-Aug-2023

#AI #AWS #Certifications

These top 7 Generative AI courses by AWS offer a pathway to explore and master the fascinating world of Generative AI...

Google Bard: A Chatbot That Generates Poetry

Google Bard: A Chatbot That Generates PoetryAuthor: neptune | 26th-Mar-2023

#Machine learning #AI #Google

Google has recently launched a new AI tool called Google Bard, which is a chatbot that can generate poetry. The chatbot is available to anyone with an internet connection, and it is free to use...

10 Essential Human Abilities: Cannot Replaced by AI

10 Essential Human Abilities: Cannot Replaced by AIAuthor: neptune | 01st-Apr-2023

#AI

AI has made remarkable progress in recent years, there are certain essential human abilities that it cannot replace. Empathy, creativity, morality, critical thinking, intuition...

Jobs at major risk due to GPT! Yours?

Jobs at major risk due to GPT! Yours?Author: neptune | 07th-Apr-2023

#Jobs #GPT

The use of GPT has the potential to replace human labor in industries such as poetry, web design, mathematics, tax preparation, blockchain, translation, and writing...

Top 5 use cases of ChatGPT in programming

Top 5 use cases of ChatGPT in programmingAuthor: neptune | 04th-Apr-2023

#AI #GPT

ChatGPT helps programmers optimize code, generate dummy data, algorithms, translate code, and format data, saving time and effort...

The Future of AI: Effective Prompt Engineering

The Future of AI: Effective Prompt EngineeringAuthor: neptune | 07th-Apr-2023

#AI #Jobs

Prompt engineering is the art of crafting effective instructions for AI models, crucial for ensuring quality, accuracy, and ethical use of AI-generated output...

Common Machine Learning Algorithms Used in the Finance Industry

Common Machine Learning Algorithms Used in the Finance IndustryAuthor: neptune | 23rd-Dec-2024

#AI #ML

The finance industry has embraced machine learning (ML) to solve complex problems and uncover patterns within vast datasets...

Liquid AI: Redesigning the Neural Network Landscape

Liquid AI: Redesigning the Neural Network LandscapeAuthor: neptune | 26th-Oct-2024

#Machine learning #AI

As AI continues to evolve, Liquid AI, an MIT spinoff, is reimagining neural networks with its innovative approach, liquid neural networks...

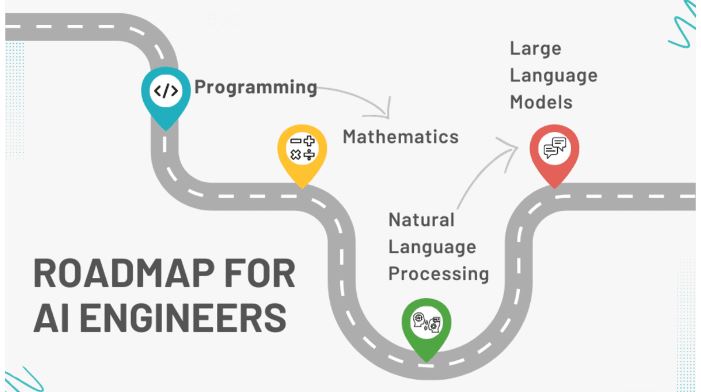

Roadmap for AI Engineers: 10 Easy Steps to Become an AI Engineer

Roadmap for AI Engineers: 10 Easy Steps to Become an AI EngineerAuthor: neptune | 22nd-Oct-2024

#Machine learning #AI

AI is transforming industries worldwide, & the demand for AI engineers has surged as companies look to incorporate ML and data-driven decisions into their operations...

AI in Agriculture: Transforming Farming with Cutting-Edge Technology

AI in Agriculture: Transforming Farming with Cutting-Edge TechnologyAuthor: neptune | 04th-Jul-2024

#AI

AI is poised to revolutionize agriculture by making farming more efficient, sustainable, and productive...

TCS Launches “GEN AI Tech Pathway” in its STEM Education Program goIT 2025

TCS Launches “GEN AI Tech Pathway” in its STEM Education Program goIT 2025Author: neptune | 13th-Jul-2025

#AI #IT #TCS

TCS’s launch of the Gen AI Tech Pathway is a clear step towards fostering AI literacy among youth, strengthening India’s position as a global digital innovation hub...

How to Train Foundation Models in Amazon Bedrock

How to Train Foundation Models in Amazon BedrockAuthor: neptune | 14th-Jul-2025

#AI #AWS

Amazon Bedrock simplifies the customization of foundation models by offering multiple techniques like fine-tuning, RAG, prompt engineering, and few-shot learning...

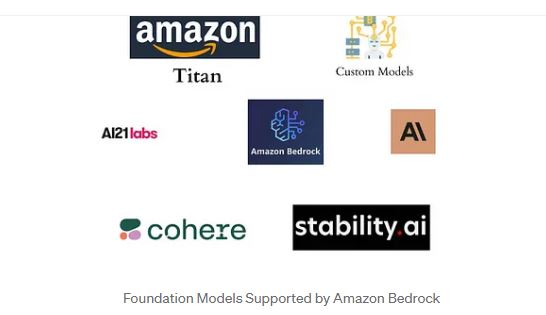

Different Types of Foundation Models Available in Amazon Bedrock

Different Types of Foundation Models Available in Amazon BedrockAuthor: neptune | 17th-Jul-2025

#AI #AWS

Amazon Bedrock offers a wide selection of foundation models from AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon Titan...

Grok xAI is more than just a chatbot – it is the gateway to a smarter, AI-driven X.com

Grok xAI is more than just a chatbot – it is the gateway to a smarter, AI-driven X.comAuthor: neptune | 17th-Jul-2025

#AI #Twitter

Elon Musk’s xAI recently unveiled Grok, a powerful conversational AI model integrated into X.com (formerly Twitter)...

New Theories on the Origins of Life: 2025 Research Challenges Old Models

New Theories on the Origins of Life: 2025 Research Challenges Old ModelsAuthor: neptune | 18th-Jul-2025

#AI

The origins of life remain one of science’s greatest mysteries. For decades, theories such as the formose reaction...

View More