Microsoft Introduces Automatic Prompt Optimization Framework for LLMs

Author: neptune | 30th-May-2023

Microsoft has once again pushed the boundaries of natural language processing (NLP) with the introduction of its latest innovation: Automatic Prompt Optimization (APO), a framework for automatically improving the prompts given to large language models (LLMs). The technique aims to make language models more accurate and easier to use by taking much of the trial and error out of writing prompts, changing the way we interact with AI systems.

What are LLMs?

LLM stands for Large Language Model. LLMs are a class of powerful artificial intelligence models that are trained on vast amounts of text data to understand and generate human-like language. These models can analyse and comprehend textual information, and generate coherent and contextually relevant responses. LLMs have gained significant attention in the field of natural language processing due to their potential applications in various domains, including chatbots, language translation, content generation, and more.

Reducing Manual Effort

LLMs are powerful, but the quality of their answers depends heavily on how they are prompted: small changes to the wording of an instruction can noticeably change how accurate and relevant the output is. Manual prompt engineering, the process of designing specific instructions or queries for LLMs, therefore involves a lot of trial and error and can be time-consuming and labour-intensive. This is where Microsoft's Automatic Prompt Optimization Framework comes into play.

Efficient and Effective

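The new framework automates the prompt engineering process with an approach its authors liken to gradient descent. The LLM is run with the current prompt on a small batch of training examples, and its mistakes are used to generate natural-language "gradients": short critiques describing what is wrong with the prompt. The prompt is then edited in the direction the critique suggests, and a beam search, combined with a bandit selection procedure that limits how many candidates must be fully evaluated, keeps the most promising versions for the next round. The result is a more efficient prompt generation process; Microsoft's paper reports that the method improved an initial prompt's performance by up to 31% across the benchmark tasks it was tested on, which included LLM jailbreak detection.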
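To make the idea concrete, the cycle of proposing prompt variants, scoring them on labelled examples and keeping only the best can be sketched as a small beam search. This is a minimal sketch under stated assumptions, not Microsoft's implementation. It loosely follows the shape described in Microsoft's APO paper, where an LLM critiques the current prompt's mistakes and then rewrites the prompt accordingly. All names (`optimise_prompt`, `score`, `toy_llm`, `toy_critique`, `toy_rewrite`) are hypothetical, and the three `toy_*` functions are deterministic stand-ins for what would be real LLM calls. The real method also uses bandit-style selection to avoid scoring every candidate on all the data; this sketch simply scores them all.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]        # (input text, expected label)
LLM = Callable[[str, str], str]  # (prompt, input text) -> predicted label


def score(prompt: str, examples: List[Example], llm: LLM) -> float:
    """Fraction of labelled examples the model gets right with this prompt."""
    correct = sum(1 for text, label in examples if llm(prompt, text) == label)
    return correct / len(examples)


def optimise_prompt(seed: str, examples: List[Example], llm: LLM,
                    critique: Callable[[str, List[Example]], str],
                    rewrite: Callable[[str, str], List[str]],
                    beam_width: int = 2, rounds: int = 3) -> str:
    """Beam search over prompts: critique each prompt's errors, rewrite, keep the best."""
    beam = [seed]
    for _ in range(rounds):
        candidates = list(beam)
        for prompt in beam:
            errors = [(t, l) for t, l in examples if llm(prompt, t) != l]
            if errors:
                feedback = critique(prompt, errors)  # the natural-language "gradient"
                candidates.extend(rewrite(prompt, feedback))
        candidates = list(dict.fromkeys(candidates))  # drop duplicates, keep order
        # Stable sort: ties keep their earlier position in the candidate list.
        candidates.sort(key=lambda p: score(p, examples, llm), reverse=True)
        beam = candidates[:beam_width]
    return beam[0]


# --- Hypothetical toy stand-ins for the LLM calls ---
def toy_llm(prompt: str, text: str) -> str:
    # Labels a message a complaint if it contains a keyword listed in the prompt.
    keywords = [k.strip() for k in prompt.split("keywords:")[-1].split(",")]
    words = text.lower().split()
    return "complaint" if any(k in words for k in keywords) else "other"


def toy_critique(prompt: str, errors: List[Example]) -> str:
    # A real system would ask an LLM to explain why the prompt failed.
    missed = [text for text, label in errors if label == "complaint"]
    return "Missed complaints: " + " | ".join(missed)


def toy_rewrite(prompt: str, feedback: str) -> List[str]:
    # One candidate per missed message: add its longest word as a new keyword.
    missed = feedback.split(": ", 1)[1].split(" | ")
    return [prompt + ", " + max(m.lower().split(), key=len) for m in missed if m]


EXAMPLES = [
    ("I want a refund", "complaint"),
    ("The app crashed again", "complaint"),
    ("My parcel never arrived", "complaint"),
    ("Thanks for the quick help", "other"),
    ("What time do you open", "other"),
]
SEED = "Label each message as complaint or other. keywords: refund"

best = optimise_prompt(SEED, EXAMPLES, toy_llm, toy_critique, toy_rewrite)
print(best, score(best, EXAMPLES, toy_llm))
```

On this toy data the seed prompt labels 3 of the 5 messages correctly (a score of 0.6). The first round of critique and rewrite reaches 0.8, the second reaches 1.0, and after that there are no errors left to critique. With real model calls, scoring each candidate becomes the dominant cost, which is why limiting the number of full evaluations matters.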

Customised Prompts

One of the significant advantages of the Automatic Prompt Optimization Framework is its adaptability to various tasks and domains. Because it optimises a prompt against examples from the target task, the same procedure can in principle be customised for use cases such as generating code, answering questions, or composing essays, provided there is a way to score the model's outputs; Microsoft's own experiments focused on text classification tasks. This flexibility opens up a wide range of possible applications across industries.

By automating prompt engineering, Microsoft has not only reduced the burden on human experts but could also help democratise the use of LLMs. Getting good results from these models has often depended on specialised prompt engineering know-how. With the new framework, users without that expertise can start from a rough prompt and let the optimiser refine it, making LLMs more accessible to a broader audience.

Bias and Human Oversight

The Automatic Prompt Optimization Framework may also help address the issue of bias in AI systems. Bias in language models has been a concern, as they often reflect the biases present in their training data. Because the framework refines prompts through iterative feedback and correction, prompts that produce skewed or unfair responses can in principle be caught and revised, although prompt optimisation alone cannot guarantee fair and unbiased output. Used carefully, this is a useful step towards building more inclusive and equitable AI systems.
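One way an iterative feedback loop could take bias into account is to score candidate prompts not only on overall accuracy but also on how evenly they perform across groups of users. The sketch below is an illustrative assumption, not a description of how Microsoft's framework handles bias: `accuracy_by_group`, `fairness_aware_score`, the `penalty` weight, the group tags "A" and "B", and the keyword-matching `toy_llm` are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str, str]   # (input text, expected label, hypothetical group tag)
LLM = Callable[[str, str], str]  # (prompt, input text) -> predicted label


def accuracy_by_group(prompt: str, examples: List[Example], llm: LLM) -> Dict[str, float]:
    """Accuracy of the prompt computed separately for each group tag."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for text, label, group in examples:
        totals[group] += 1
        hits[group] += int(llm(prompt, text) == label)
    return {group: hits[group] / totals[group] for group in totals}


def fairness_aware_score(prompt: str, examples: List[Example], llm: LLM,
                         penalty: float = 1.0) -> float:
    """Overall accuracy minus a penalty on the gap between best and worst group."""
    per_group = accuracy_by_group(prompt, examples, llm)
    overall = sum(int(llm(prompt, t) == l) for t, l, _ in examples) / len(examples)
    gap = max(per_group.values()) - min(per_group.values())
    return overall - penalty * gap


def toy_llm(prompt: str, text: str) -> str:
    # Hypothetical stand-in for a model: "positive" if any listed word appears.
    positive_words = [w.strip() for w in prompt.split("positive words:")[-1].split(",")]
    return "positive" if any(w in text.lower().split() for w in positive_words) else "negative"


EXAMPLES = [
    ("the food was good", "positive", "A"),
    ("the service was bad", "negative", "A"),
    ("the food was fire", "positive", "B"),  # group B writes in informal slang
    ("the service was mid", "negative", "B"),
]
NARROW = "Classify sentiment. positive words: good"
BROAD = "Classify sentiment. positive words: good, fire"

best = max([NARROW, BROAD], key=lambda p: fairness_aware_score(p, EXAMPLES, toy_llm))
print(best)
```

Here `NARROW` is perfect for group A but gets only half of group B right, so its score falls from 0.75 (plain accuracy) to 0.25 once the 0.5 accuracy gap is penalised, while `BROAD` scores 1.0. Choosing the groups, the penalty weight and the evaluation data is a human judgement that the scoring function cannot make on its own.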

Furthermore, the framework can support collaboration between humans and AI systems. Instead of replacing human expertise, it augments it: the system suggests prompt designs, and human experts review them and provide feedback and guidance that shape the model's performance. This human-in-the-loop approach helps keep LLMs aligned with human values and preferences, striking a balance between automation and human control.
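The suggest-then-review workflow described above could be wired up as a simple approval gate: the optimiser ranks its candidate prompts and a person approves one before it is used. This is a hypothetical sketch; `human_review`, `reviewer` and the candidate prompts with their scores are illustrative, and a rule-based `reviewer` stands in for a person. In an interactive tool the `approve` callback might ask the reviewer via `input()` instead.

```python
from typing import Callable, Dict, Optional


def human_review(candidates: Dict[str, float],
                 approve: Callable[[str, float], bool],
                 top_k: int = 3) -> Optional[str]:
    """Offer the top-k scored prompts to a reviewer; return the first one approved."""
    ranked = sorted(candidates.items(), key=lambda item: item[1], reverse=True)
    for prompt, score in ranked[:top_k]:
        if approve(prompt, score):
            return prompt
    return None  # nothing approved: the reviewer's feedback goes back to the optimiser


# Hypothetical optimiser output: candidate prompt -> validation score.
CANDIDATES = {
    "Answer yes or no. ALWAYS answer yes if unsure.": 0.92,
    "Answer yes or no; reply 'unsure' when the text is ambiguous.": 0.88,
    "Answer.": 0.40,
}


def reviewer(prompt: str, score: float) -> bool:
    # Stand-in for a human judgement: reject prompts that force a default answer.
    return "ALWAYS" not in prompt


print(human_review(CANDIDATES, reviewer))
```

The highest-scoring prompt is rejected because it hard-codes a default answer, so the reviewer's pick is the second candidate. The design keeps the automated score as a ranking aid while leaving the final decision with a person.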

Microsoft's Commitment

Microsoft's commitment to responsible AI is evident in the Automatic Prompt Optimization Framework. The company is actively working towards providing tools and methodologies that enable developers and researchers to build ethical and transparent AI systems. By democratising access to LLMs and facilitating prompt optimization, Microsoft empowers users to leverage AI technologies responsibly.

Conclusion

In conclusion, Microsoft's Automatic Prompt Optimization Framework is a groundbreaking advancement in the field of NLP. By automating prompt engineering and optimising the prompts given to LLMs, the framework could transform the way we interact with language models. Its adaptability, potential for bias mitigation, and human-in-the-loop approach make it a promising tool for a wide range of applications. As we continue to explore the potential of AI, innovations like this propel us forward, opening new avenues for collaboration and advancement in natural language processing.

#JavaScript #Python #Hackerrank #AI #Motivation #React.js #Interview #Testing #SQL #Selenium #IT #LeetCode #Machine learning #Problem Solving #API #Java #AWS #GPT #TCS #Algorithms #Certifications #Github #Projects #Jobs #Django #Microservice #Node.js #Google #Story #Pip #Data Science #Postman #Health #Twitter #Elon Musk #ML

PaLM 2: Google's Multilingual, Reasoning, and Coding Model

PaLM 2: Google's Multilingual, Reasoning, and Coding ModelAuthor: neptune | 13th-May-2023

#Machine learning #AI #Google

Google introduces PaLM 2, a highly versatile language model with improved multilingual, reasoning, and coding capabilities powering over 25 Google products and features...

Comparing Chat GPT and Google Bard: Differences and Applications

Comparing Chat GPT and Google Bard: Differences and ApplicationsAuthor: neptune | 17th-Jun-2023

#Machine learning #AI #Google #GPT

Chat GPT and Google Bard are two of the most popular language models that have been developed in recent years. Both of these models are designed to generate human-like responses to text-based inputs...

7 Open Source Models From OpenAI

7 Open Source Models From OpenAIAuthor: neptune | 11th-May-2023

#Machine learning #AI

Elon Musk criticized OpenAI for becoming a closed source, profit-driven company. Despite this, OpenAI has released seven open source models, including CLIP and Dall-E...

The Godfather of AI Sounds the Alarm: Why Geoffrey Hinton Quit Google?

The Godfather of AI Sounds the Alarm: Why Geoffrey Hinton Quit Google?Author: neptune | 09th-May-2023

#Machine learning #AI

Geoffrey Hinton, the Godfather of AI, has quit Google and warned of the danger of AI, particularly the next generation AI language model, GPT-4...

Generative AI Made Easy: Explore Top 7 AWS Courses

Generative AI Made Easy: Explore Top 7 AWS CoursesAuthor: neptune | 05th-Aug-2023

#AI #AWS #Certifications

These top 7 Generative AI courses by AWS offer a pathway to explore and master the fascinating world of Generative AI...

Google Bard: A Chatbot That Generates Poetry

Google Bard: A Chatbot That Generates PoetryAuthor: neptune | 26th-Mar-2023

#Machine learning #AI #Google

Google has recently launched a new AI tool called Google Bard, which is a chatbot that can generate poetry. The chatbot is available to anyone with an internet connection, and it is free to use...

Jobs at major risk due to GPT! Yours?

Jobs at major risk due to GPT! Yours?Author: neptune | 07th-Apr-2023

#Jobs #GPT

The use of GPT has the potential to replace human labor in industries such as poetry, web design, mathematics, tax preparation, blockchain, translation, and writing...

10 Essential Human Abilities: Cannot Replaced by AI

10 Essential Human Abilities: Cannot Replaced by AIAuthor: neptune | 01st-Apr-2023

#AI

AI has made remarkable progress in recent years, there are certain essential human abilities that it cannot replace. Empathy, creativity, morality, critical thinking, intuition...

Top 5 use cases of ChatGPT in programming

Top 5 use cases of ChatGPT in programmingAuthor: neptune | 04th-Apr-2023

#AI #GPT

ChatGPT helps programmers optimize code, generate dummy data, algorithms, translate code, and format data, saving time and effort...

The Future of AI: Effective Prompt Engineering

The Future of AI: Effective Prompt EngineeringAuthor: neptune | 07th-Apr-2023

#AI #Jobs

Prompt engineering is the art of crafting effective instructions for AI models, crucial for ensuring quality, accuracy, and ethical use of AI-generated output...

Common Machine Learning Algorithms Used in the Finance Industry

Common Machine Learning Algorithms Used in the Finance IndustryAuthor: neptune | 23rd-Dec-2024

#AI #ML

The finance industry has embraced machine learning (ML) to solve complex problems and uncover patterns within vast datasets...

Liquid AI: Redesigning the Neural Network Landscape

Liquid AI: Redesigning the Neural Network LandscapeAuthor: neptune | 26th-Oct-2024

#Machine learning #AI

As AI continues to evolve, Liquid AI, an MIT spinoff, is reimagining neural networks with its innovative approach, liquid neural networks...

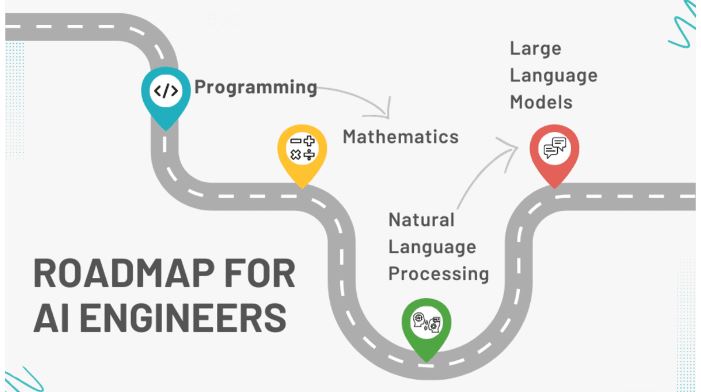

Roadmap for AI Engineers: 10 Easy Steps to Become an AI Engineer

Roadmap for AI Engineers: 10 Easy Steps to Become an AI EngineerAuthor: neptune | 22nd-Oct-2024

#Machine learning #AI

AI is transforming industries worldwide, & the demand for AI engineers has surged as companies look to incorporate ML and data-driven decisions into their operations...

AI in Agriculture: Transforming Farming with Cutting-Edge Technology

AI in Agriculture: Transforming Farming with Cutting-Edge TechnologyAuthor: neptune | 04th-Jul-2024

#AI

AI is poised to revolutionize agriculture by making farming more efficient, sustainable, and productive...

TCS Launches “GEN AI Tech Pathway” in its STEM Education Program goIT 2025

TCS Launches “GEN AI Tech Pathway” in its STEM Education Program goIT 2025Author: neptune | 13th-Jul-2025

#AI #IT #TCS

TCS’s launch of the Gen AI Tech Pathway is a clear step towards fostering AI literacy among youth, strengthening India’s position as a global digital innovation hub...

How to Train Foundation Models in Amazon Bedrock

How to Train Foundation Models in Amazon BedrockAuthor: neptune | 14th-Jul-2025

#AI #AWS

Amazon Bedrock simplifies the customization of foundation models by offering multiple techniques like fine-tuning, RAG, prompt engineering, and few-shot learning...

View More