Meta’s new LIMA language model reaches GPT-4 level

Author: neptune | 30th-May-2023

Meta's AI researchers have unveiled LIMA, a language model whose responses approach those of GPT-4 and Bard despite being fine-tuned on only a small number of examples. In the human evaluation reported in the paper, LIMA's responses were rated equivalent or preferred to GPT-4's in 43% of cases and to Bard's in 58%. The acronym LIMA stands for "Less Is More for Alignment," and it reflects the model's purpose: demonstrating that strong results can be achieved with just a handful of fine-tuning examples on top of an already pre-trained model.

Refinement through Selective Examples

The Meta research team set out to refine their existing 65-billion-parameter LLaMA model, which became widely known after its weights leaked and which helped kick-start the open-source language model movement. In a departure from OpenAI's approach, Meta chose to forgo the resource-intensive Reinforcement Learning from Human Feedback (RLHF) method for model tuning. Instead, they fine-tuned the model on just 1,000 carefully selected examples. As Meta emphasises in their research paper, this decision challenges the conventional wisdom that extensive human feedback training is indispensable for advancing AI capabilities.
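As a rough illustration of what fine-tuning on curated prompt-response pairs optimises, the sketch below computes a token-level cross-entropy loss over the response part of one example. It is not Meta's training code: the function name `sft_loss`, the toy logits, and the choice to exclude prompt tokens from the loss are all assumptions made for this example.

```python
import math


def sft_loss(logits, targets, loss_mask):
    """Mean next-token cross-entropy over positions where loss_mask is 1.

    logits:    one list of per-token scores (length = vocabulary size) per position
    targets:   index of the correct next token at each position
    loss_mask: 1 for positions that count towards the loss, 0 otherwise
    """
    total = 0.0
    counted = 0
    for row, target, keep in zip(logits, targets, loss_mask):
        if not keep:
            continue  # assumption: prompt tokens do not contribute to the loss
        peak = max(row)  # subtract the maximum for numerical stability
        log_norm = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_norm - row[target]  # negative log-probability of the target
        counted += 1
    return total / counted if counted else 0.0


# Hypothetical toy example: vocabulary of 4 tokens, sequence of 3 positions.
toy_logits = [
    [0.0, 0.0, 0.0, 0.0],  # prompt position, masked out
    [0.0, 0.0, 0.0, 0.0],  # response position, uniform prediction
    [5.0, 0.0, 0.0, 0.0],  # response position, confident in token 0
]
toy_targets = [1, 2, 0]
toy_mask = [0, 1, 1]

print(sft_loss(toy_logits, toy_targets, toy_mask))
```

In a real setup the logits would come from the language model and the loss would be averaged over every example in the curated set. The point of the sketch is only that this objective needs nothing beyond the example responses themselves, with no reward model or preference data.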

The Superficial Alignment Hypothesis

Meta's research introduces a concept known as the "superficial alignment hypothesis." According to this hypothesis, a model acquires almost all of its knowledge and capabilities during pre-training, and the alignment phase that follows mainly teaches it which style or format to use when interacting with users. Fine-tuning is therefore more about capturing the desired style than about teaching substantive content. This notion challenges the prevalent practice of employing intricate and protracted fine-tuning processes, such as OpenAI's RLHF.

A Game-Changer in Language Modeling

LIMA represents a significant step forward in the field of NLP. Built on the 65-billion-parameter LLaMA model, it approaches the performance of GPT-4 and Bard with a minimalist fine-tuning recipe of only 1,000 carefully chosen examples. In doing so, it questions whether the resource-intensive RLHF method used by OpenAI, and extensive human feedback training more generally, is really indispensable.

The Power of an Innovative Approach

Meta's research team concludes that RLHF may not be as crucial as previously assumed, signalling a potential shift in how AI language models are developed. With LIMA, Meta has both demonstrated the power of its approach and opened the way for further work in language modelling that prioritises efficiency without compromising on quality. The result points towards an era in NLP where less is indeed more for achieving alignment.

Conclusion

Meta's AI researchers have introduced the LIMA language model, which approaches the performance of GPT-4 and Bard. Fine-tuned on a minimal number of examples, LIMA challenges the belief that extensive human feedback training is essential for advancing AI capabilities. The accompanying "superficial alignment hypothesis" suggests that fine-tuning is primarily about capturing style rather than substance. By diverging from the resource-intensive RLHF method employed by OpenAI, LIMA shows that efficiency need not come at the cost of quality, and that in language modelling less can indeed achieve more.


PaLM 2: Google's Multilingual, Reasoning, and Coding Model

PaLM 2: Google's Multilingual, Reasoning, and Coding ModelAuthor: neptune | 13th-May-2023

#Machine learning #AI #Google

Google introduces PaLM 2, a highly versatile language model with improved multilingual, reasoning, and coding capabilities powering over 25 Google products and features...

Comparing Chat GPT and Google Bard: Differences and Applications

Comparing Chat GPT and Google Bard: Differences and ApplicationsAuthor: neptune | 17th-Jun-2023

#Machine learning #AI #Google #GPT

Chat GPT and Google Bard are two of the most popular language models that have been developed in recent years. Both of these models are designed to generate human-like responses to text-based inputs...

7 Open Source Models From OpenAI

7 Open Source Models From OpenAIAuthor: neptune | 11th-May-2023

#Machine learning #AI

Elon Musk criticized OpenAI for becoming a closed source, profit-driven company. Despite this, OpenAI has released seven open source models, including CLIP and Dall-E...

The Godfather of AI Sounds the Alarm: Why Geoffrey Hinton Quit Google?

The Godfather of AI Sounds the Alarm: Why Geoffrey Hinton Quit Google?Author: neptune | 09th-May-2023

#Machine learning #AI

Geoffrey Hinton, the Godfather of AI, has quit Google and warned of the danger of AI, particularly the next generation AI language model, GPT-4...

Generative AI Made Easy: Explore Top 7 AWS Courses

Generative AI Made Easy: Explore Top 7 AWS CoursesAuthor: neptune | 05th-Aug-2023

#AI #AWS #Certifications

These top 7 Generative AI courses by AWS offer a pathway to explore and master the fascinating world of Generative AI...

Google Bard: A Chatbot That Generates Poetry

Google Bard: A Chatbot That Generates PoetryAuthor: neptune | 26th-Mar-2023

#Machine learning #AI #Google

Google has recently launched a new AI tool called Google Bard, which is a chatbot that can generate poetry. The chatbot is available to anyone with an internet connection, and it is free to use...

10 Essential Human Abilities: Cannot Replaced by AI

10 Essential Human Abilities: Cannot Replaced by AIAuthor: neptune | 01st-Apr-2023

#AI

AI has made remarkable progress in recent years, there are certain essential human abilities that it cannot replace. Empathy, creativity, morality, critical thinking, intuition...

Top 5 use cases of ChatGPT in programming

Top 5 use cases of ChatGPT in programmingAuthor: neptune | 04th-Apr-2023

#AI #GPT

ChatGPT helps programmers optimize code, generate dummy data, algorithms, translate code, and format data, saving time and effort...

The Future of AI: Effective Prompt Engineering

The Future of AI: Effective Prompt EngineeringAuthor: neptune | 07th-Apr-2023

#AI #Jobs

Prompt engineering is the art of crafting effective instructions for AI models, crucial for ensuring quality, accuracy, and ethical use of AI-generated output...

Common Machine Learning Algorithms Used in the Finance Industry

Common Machine Learning Algorithms Used in the Finance IndustryAuthor: neptune | 23rd-Dec-2024

#AI #ML

The finance industry has embraced machine learning (ML) to solve complex problems and uncover patterns within vast datasets...

Liquid AI: Redesigning the Neural Network Landscape

Liquid AI: Redesigning the Neural Network LandscapeAuthor: neptune | 26th-Oct-2024

#Machine learning #AI

As AI continues to evolve, Liquid AI, an MIT spinoff, is reimagining neural networks with its innovative approach, liquid neural networks...

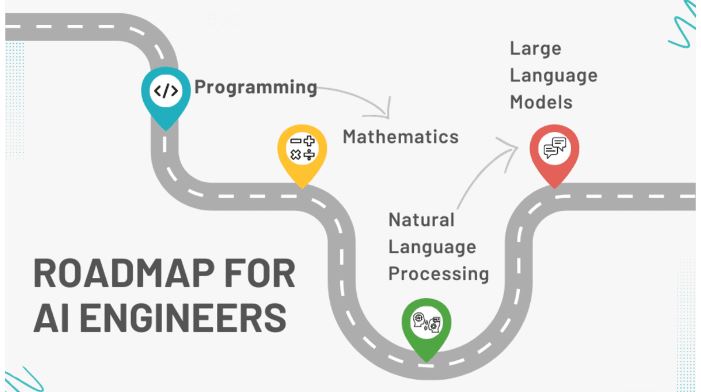

Roadmap for AI Engineers: 10 Easy Steps to Become an AI Engineer

Roadmap for AI Engineers: 10 Easy Steps to Become an AI EngineerAuthor: neptune | 22nd-Oct-2024

#Machine learning #AI

AI is transforming industries worldwide, & the demand for AI engineers has surged as companies look to incorporate ML and data-driven decisions into their operations...

AI in Agriculture: Transforming Farming with Cutting-Edge Technology

AI in Agriculture: Transforming Farming with Cutting-Edge TechnologyAuthor: neptune | 04th-Jul-2024

#AI

AI is poised to revolutionize agriculture by making farming more efficient, sustainable, and productive...

TCS Launches “GEN AI Tech Pathway” in its STEM Education Program goIT 2025

TCS Launches “GEN AI Tech Pathway” in its STEM Education Program goIT 2025Author: neptune | 13th-Jul-2025

#AI #IT #TCS

TCS’s launch of the Gen AI Tech Pathway is a clear step towards fostering AI literacy among youth, strengthening India’s position as a global digital innovation hub...

How to Train Foundation Models in Amazon Bedrock

How to Train Foundation Models in Amazon BedrockAuthor: neptune | 14th-Jul-2025

#AI #AWS

Amazon Bedrock simplifies the customization of foundation models by offering multiple techniques like fine-tuning, RAG, prompt engineering, and few-shot learning...

View More